
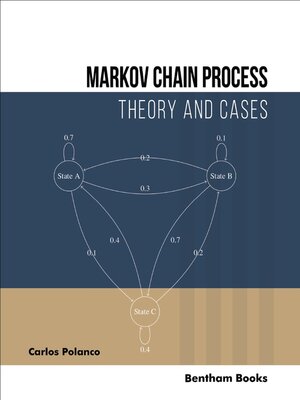

Markov Chain Process: Theory and Cases is designed for students of the natural and formal sciences. It explains the fundamentals of stochastic processes that satisfy the Markov property. Its 10 structured chapters give comprehensive insight into this complex subject through many examples and case studies that help readers deepen their knowledge and connect theory to practice.

The book is divided into four parts. The first part thoroughly examines the definitions of probability, independent events, mutually exclusive and non-mutually exclusive events, conditional probability, and Bayes' theorem, all essential elements of Markov chain theory. The second part covers probability vectors, stochastic matrices, regular stochastic matrices, and fixed points. The third part presents cases from several disciplines: predictive computational science, urban complex systems, computational finance, computational biology, complex systems theory, and computational science in engineering. The last part introduces readers to Fortran 90 programs and Linux scripts.

To make Markov chain concepts easier to grasp, fully worked solutions to all examples, exercises, and case studies are given in a separate section. The book can serve as a primary or auxiliary textbook for students whose courses require an understanding of Markov chains, and as a reference for researchers who want to learn about methods based on Markov processes.