Alpaca Fine-Tuning with LLaMA

ebook ∣ The Complete Guide for Developers and Engineers

By William Smith

Sign up to save your library

With an OverDrive account, you can save your favorite libraries for at-a-glance information about availability. Find out more about OverDrive accounts.

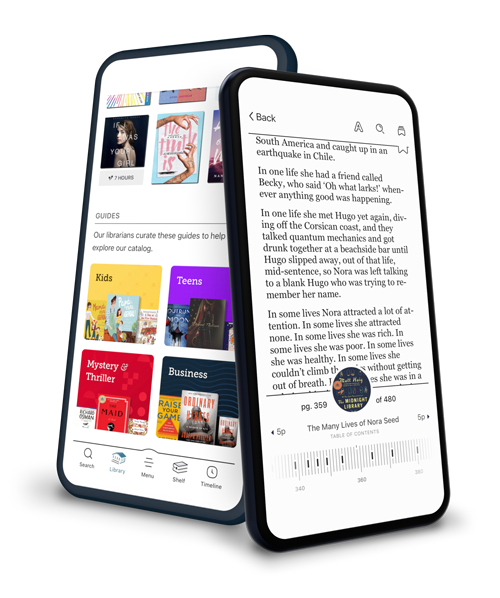

Find this title in Libby, the library reading app by OverDrive.

Search for a digital library with this title

Title found at these libraries:

| Library Name | Distance |

|---|---|

| Loading... |

"Alpaca Fine-Tuning with LLaMA" is a comprehensive, expert-level exploration of the mechanics and methodology behind instruction-tuned large language models, with a particular focus on the foundational LLaMA architecture and its influential Alpaca variant. The book begins by guiding readers through the evolution and engineering innovations of LLaMA, situating it within the competitive LLM landscape through rigorous technical comparisons to models like GPT and Vicuna. Foundational concepts such as pretraining regimes, scaling laws, and the theory and practicalities of instruction tuning are elucidated alongside a detailed examination of emergent model capabilities and contemporary alignment challenges.

Progressing beyond theory, the book offers practical, scalable recipes for infrastructure setup, data engineering, and end-to-end fine-tuning pipelines. Readers gain actionable expertise in advanced hardware design, distributed cluster orchestration, and optimized throughput, all while balancing costs and environmental impacts. Thorough coverage of data sourcing, quality assurance, synthetic data generation, and robust metadata tracking is complemented by hands-on instruction for supervised fine-tuning workflows, parameter-efficient techniques like LoRA, multi-domain adaptation, and distributed training strategies tailored for both cloud-scale and federated deployments.

Recognizing the complexities of putting fine-tuned models into responsible production, the book closes with authoritative chapters on evaluation methodology, alignment via human feedback and RLHF, ethical considerations, and lifecycle management. It provides practical insights on real-time inference, monitoring, security, feedback loops, and safe continuous improvement, concluding with a forward-looking survey of continual learning, cross-lingual and multimodal innovations, and the collaborative open-source ecosystem driving the field forward. "Alpaca Fine-Tuning with LLaMA" is an indispensable technical guide and visionary resource for machine learning practitioners, researchers, and architects shaping the next wave of instruction-following AI.