LoRA Techniques for Large Language Model Adaptation

Ebook | The Complete Guide for Developers and Engineers

By William Smith

Sign up to save your library

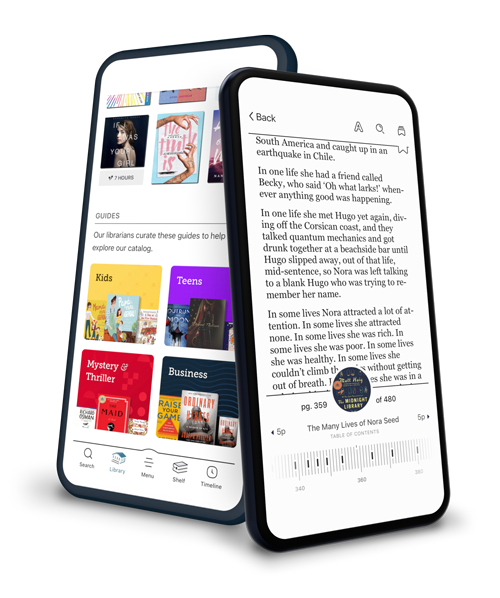

With an OverDrive account, you can save your favorite libraries for at-a-glance information about availability. Find out more about OverDrive accounts.

Find this title in Libby, the library reading app by OverDrive.

Search for a digital library with this title

Title found at these libraries:

| Library Name | Distance |

|---|---|

| Loading... |


"LoRA Techniques for Large Language Model Adaptation" offers an in-depth treatment of the principles, mechanics, and practicalities of adapting large language models (LLMs) using Low-Rank Adaptation (LoRA). Beginning with an overview of the evolution and scaling of LLMs, the book systematically addresses the challenges of adapting foundation models, highlighting why traditional fine-tuning methods often fall short in efficiency and scalability. Drawing on real-world use cases and the growing adoption of LoRA across both research and industry, it situates readers at the cutting edge of parameter-efficient fine-tuning techniques.

The work stands out for its rigorous treatment of the mathematical and engineering foundations underpinning LoRA. Through detailed explorations of low-rank matrix decomposition, formal parameter mappings, and empirical strategies for rank selection, readers gain a robust understanding of both the theoretical expressivity and the practical impact of LoRA compared with other adaptation techniques. The text moves beyond the abstract, offering actionable guidance for integrating LoRA into modern transformer architectures, optimizing training for scale and under resource constraints, and combining composable and hybrid approaches to meet diverse adaptation goals.

Bridging theory and application, the book culminates in advanced chapters on operationalizing LoRA in real-world settings, evaluating adaptation effectiveness, and innovating for next-generation language models. It presents a rich collection of strategies for serving LoRA-augmented models in production, maintaining long-term adaptability, and meeting the needs of privacy-conscious environments. Through tutorials, case studies, and a survey of open-source tools, "LoRA Techniques for Large Language Model Adaptation" provides a definitive resource for machine learning practitioners, researchers, and engineers seeking to master the art and science of efficient large model adaptation.