Exploiting Environment Configurability in Reinforcement Learning

ebook ∣ Frontiers in Artificial Intelligence and Applications

By Alberto Maria Metelli

Sign up to save your library

With an OverDrive account, you can save your favorite libraries for at-a-glance information about availability. Find out more about OverDrive accounts.

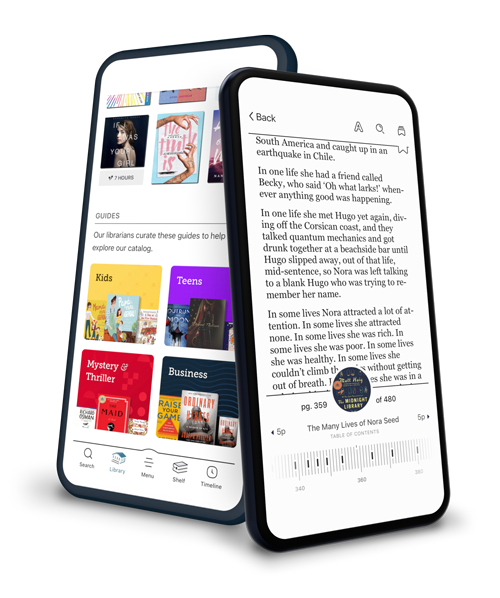

Find this title in Libby, the library reading app by OverDrive.

Search for a digital library with this title

Title found at these libraries:

| Library Name | Distance |

|---|---|

| Loading... |

In recent decades, Reinforcement Learning (RL) has emerged as an effective approach to complex control tasks. The framework typically used is the Markov Decision Process (MDP), in which the agent perceives the state of the environment and performs actions. As a consequence, the environment transitions to a new state and generates a reward signal. The goal of the agent is to learn a policy, i.e., a prescription of actions that maximizes the long-term reward. In an MDP, the environment is assumed to be a fixed entity that cannot be altered externally. There are, however, several real-world scenarios in which the environment can be modified to a limited extent.

This book, Exploiting Environment Configurability in Reinforcement Learning, aims to formalize and study diverse aspects of environment configuration. Although environment configuration arises quite often in real applications, the topic has received little attention in the literature. The contributions in the book are theoretical, algorithmic, and experimental, and can be broadly subdivided into three parts. The first part introduces the novel formalism of Configurable Markov Decision Processes (Conf-MDPs) to model the configuration opportunities offered by the environment. The second part focuses on the cooperative Conf-MDP setting and investigates the problem of finding an agent policy and an environment configuration that jointly optimize the long-term reward. The third part addresses two specific applications of the Conf-MDP framework: policy space identification and control frequency adaptation.

The book will be of interest to all those using RL as part of their work.
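To make the cooperative setting concrete, here is a minimal Python sketch. It is not taken from the book: the two-state environment, the configuration parameter `omega` (the probability that a "move" action succeeds), the reward, and the brute-force search are all assumptions chosen for illustration. It only shows what it means to pick a policy and an environment configuration together so as to maximize the discounted long-term reward.

```python
from itertools import product

GAMMA = 0.9                 # discount factor (assumed)
STATES = (0, 1)
ACTIONS = (0, 1)            # 0 = stay, 1 = move
CONFIGS = (0.5, 0.7, 0.9)   # hypothetical configurations: P("move" succeeds)


def transition(state, action, omega):
    """Return {next_state: probability} under configuration omega."""
    if action == 0:
        return {state: 1.0}
    return {1 - state: omega, state: 1.0 - omega}


def reward(state, action):
    """Toy reward: 1 for every step spent in state 1, 0 otherwise."""
    return 1.0 if state == 1 else 0.0


def evaluate(policy, omega, sweeps=500):
    """Iterative policy evaluation of a deterministic policy (tuple: state -> action)."""
    values = [0.0 for _ in STATES]
    for _ in range(sweeps):
        # Synchronous backup: the comprehension reads the previous sweep's values.
        values = [
            reward(s, policy[s])
            + GAMMA * sum(p * values[s2]
                          for s2, p in transition(s, policy[s], omega).items())
            for s in STATES
        ]
    return values


def best_joint_choice(start_state=0):
    """Exhaustively search every (policy, configuration) pair."""
    policies = product(ACTIONS, repeat=len(STATES))
    candidates = product(policies, CONFIGS)
    return max(candidates, key=lambda pc: evaluate(pc[0], pc[1])[start_state])


policy, omega = best_joint_choice()
print("policy:", policy, "configuration:", omega)
print("value from state 0:", evaluate(policy, omega)[0])
```

Exhaustive search is only feasible because this toy problem has four deterministic policies and three configurations; it stands in for, and should not be read as, the algorithms developed in the book.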