Quantization Methods for Large Language Models: From Theory to Real-World Implementations

ebook

By Anand Vemula

Sign up to save your library

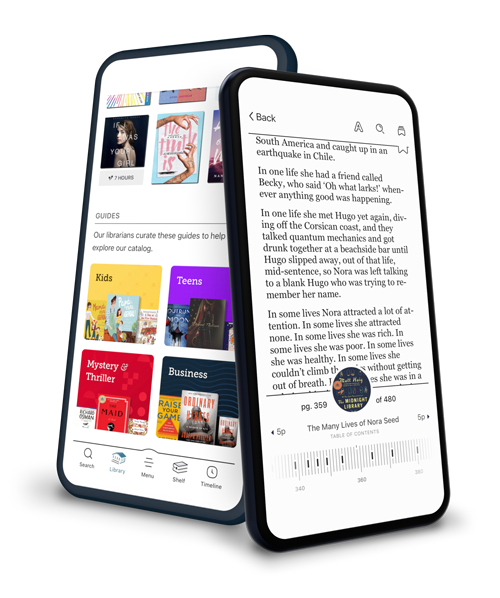

With an OverDrive account, you can save your favorite libraries for at-a-glance information about availability. Find out more about OverDrive accounts.

Find this title in Libby, the library reading app by OverDrive.

Search for a digital library with this title

Title found at these libraries:

| Loading... |

The book offers an in-depth look at quantization techniques for large language models (LLMs) and their impact on model efficiency, performance, and deployment.

The book starts with a foundational overview of quantization, explaining its significance in reducing the computational and memory requirements of LLMs. It delves into various quantization methods, including uniform and non-uniform quantization, per-layer and per-channel quantization, and hybrid approaches. Each technique is examined for its applicability and trade-offs, helping readers select the best method for their specific needs.
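To make the per-layer versus per-channel distinction concrete, here is a minimal sketch in plain Python. It is not taken from the book: the 8-bit symmetric uniform scheme, the toy weight matrix, and all function names (`scale_for`, `quantize`, `per_layer`, `per_channel`, `row_error`) are assumptions chosen for illustration. It quantizes a small weight matrix once with a single shared scale and once with one scale per row, then compares the rounding error on the row with small values.

```python
QMAX = 127  # largest magnitude of a signed 8-bit code (assumed bit width)


def scale_for(values):
    """Symmetric uniform scale: map the largest magnitude onto QMAX."""
    max_abs = max(abs(v) for v in values)
    return max_abs / QMAX if max_abs > 0 else 1.0


def quantize(values, scale):
    """Round each value to the nearest integer code and clamp to [-QMAX, QMAX]."""
    return [max(-QMAX, min(QMAX, round(v / scale))) for v in values]


def dequantize(codes, scale):
    """Map integer codes back to approximate real values."""
    return [c * scale for c in codes]


def per_layer(weight):
    """One scale shared by every entry of the matrix."""
    scale = scale_for([v for row in weight for v in row])
    return [dequantize(quantize(row, scale), scale) for row in weight]


def per_channel(weight):
    """One scale per row (each row stands in for an output channel)."""
    restored = []
    for row in weight:
        scale = scale_for(row)
        restored.append(dequantize(quantize(row, scale), scale))
    return restored


def row_error(original_row, restored_row):
    """Largest absolute rounding error within one row."""
    return max(abs(a - b) for a, b in zip(original_row, restored_row))


# Toy weights (hypothetical): one small-magnitude row, one large-magnitude row.
weight = [
    [0.010, -0.020, 0.015],
    [1.500, -2.000, 0.750],
]

small_err_layer = row_error(weight[0], per_layer(weight)[0])
small_err_channel = row_error(weight[0], per_channel(weight)[0])

print("small-row error, per-layer scale:  ", small_err_layer)
print("small-row error, per-channel scale:", small_err_channel)
```

With a shared scale, the large row dictates the step size, so the small row is rounded coarsely. Giving each row its own scale shrinks that error at the cost of storing one scale per channel, which is the kind of trade-off the description refers to.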

The guide further explores advanced topics such as quantization for edge devices and multi-lingual models. It contrasts dynamic and static quantization strategies and discusses emerging trends in the field. Practical examples, use cases, and case studies are provided to illustrate how these techniques are applied in real-world scenarios, including the quantization of popular models like GPT and BERT.